Each Task Pair consists of three core components:

- `first_frame.png`: Starting point or problem setup
- `final_frame.png`: Goal state or expected solution
- `prompt.txt`: Instructions for the video model
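As an illustration of the three-file structure, the sketch below loads one Task Pair from disk. It assumes each task lives in its own directory containing exactly these three files; that layout, and the names `TaskPair` and `load_task_pair`, are hypothetical and are not taken from the VMEvalKit API.

```python
from dataclasses import dataclass
from pathlib import Path

# File names come from the Task Pair description; the directory layout is assumed.
REQUIRED_FILES = ("first_frame.png", "final_frame.png", "prompt.txt")


@dataclass
class TaskPair:
    """Hypothetical container for one task (not a VMEvalKit class)."""
    first_frame: Path   # starting point or problem setup
    final_frame: Path   # goal state or expected solution
    prompt: str         # instructions for the video model


def load_task_pair(task_dir):
    """Load a Task Pair from a directory, failing loudly if a file is missing."""
    task_dir = Path(task_dir)
    missing = [name for name in REQUIRED_FILES if not (task_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"{task_dir} is missing: {', '.join(missing)}")
    prompt = (task_dir / "prompt.txt").read_text(encoding="utf-8").strip()
    return TaskPair(
        first_frame=task_dir / "first_frame.png",
        final_frame=task_dir / "final_frame.png",
        prompt=prompt,
    )
```

Checking for all three files up front means an incomplete task surfaces as a single clear error rather than a failure partway through evaluation.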

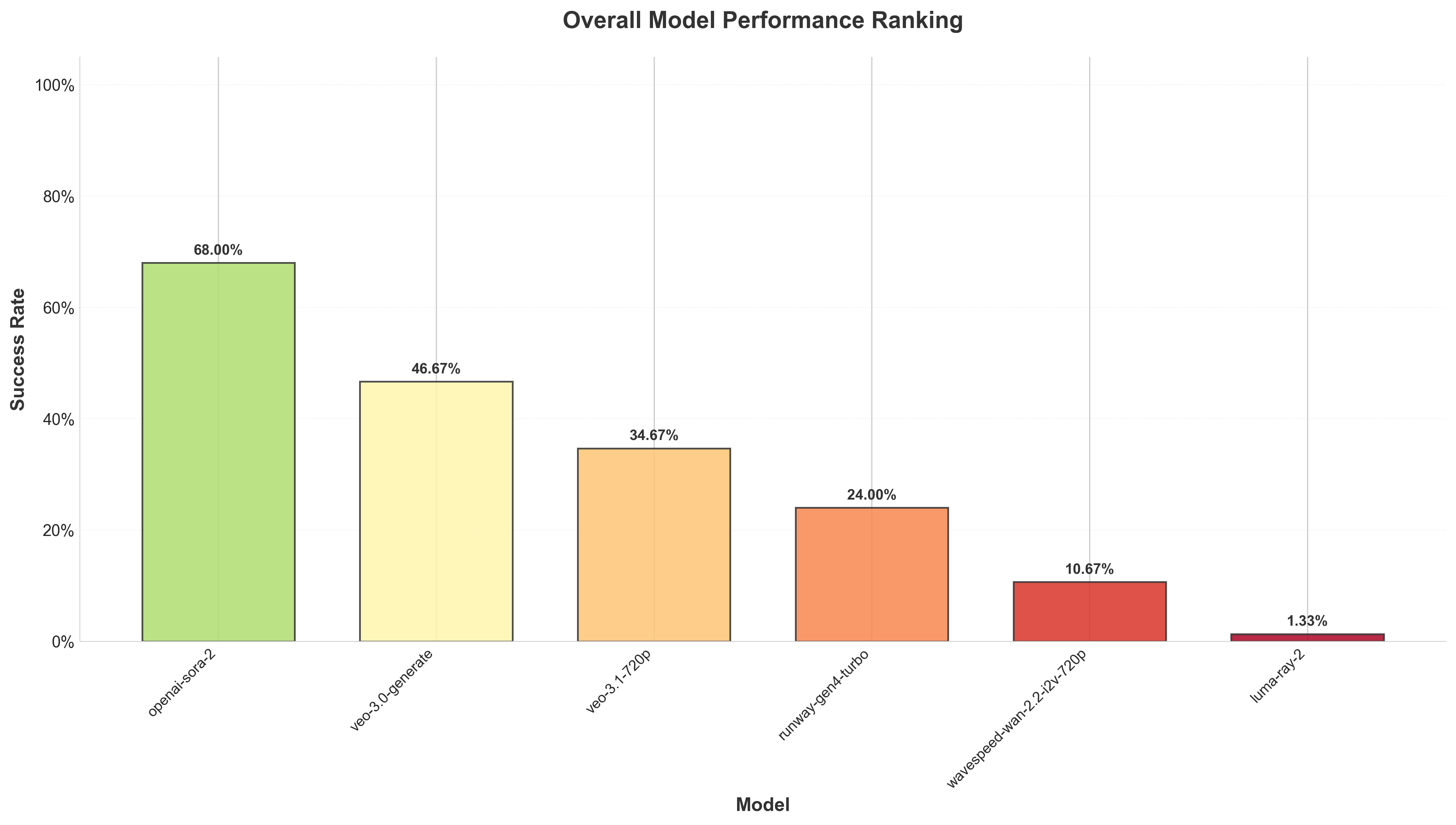

We introduce a benchmark that evaluates the reasoning abilities of video models across multiple tasks. We find that Sora-2 achieves a success rate above 60%. Our experiments establish a clear performance hierarchy across different model architectures and training methods. We develop a robust and scalable evaluation paradigm, together with a unified and modular VMEvalKit framework that supports diverse task generation and highly reliable assessments. The framework provides clear success signals, creating opportunities for future reinforcement learning (RL) fine-tuning to further improve reasoning consistency.

- 40+ video generation models across 11 provider families
- 9 cognitive task types with procedural generation

In the chess task, video models must generate legal chess moves and visualize the complete game progression. This tests spatial reasoning, rule understanding, and sequential planning.

In the maze task, models navigate from start to goal through complex maze structures. This evaluates path-finding and spatial memory in video generation.
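To make the maze success criterion concrete, here is a minimal sketch of a path check. It assumes the trajectory has already been extracted from the generated video as a list of grid cells, and that the maze is a grid of strings with `#` marking walls. Both the representation and the function name `is_valid_maze_path` are assumptions made for illustration, not the framework's actual scoring code.

```python
def is_valid_maze_path(grid, path, start, goal):
    """Return True if `path` is a legal walk from `start` to `goal`.

    grid: list of equal-length strings, '#' is a wall, anything else is open.
    path: list of (row, col) cells, assumed to be extracted from the video.
    """
    # The walk must begin at the start cell and end at the goal cell.
    if not path or path[0] != start or path[-1] != goal:
        return False

    rows, cols = len(grid), len(grid[0])

    # Every visited cell must be inside the grid and not a wall.
    for r, c in path:
        if not (0 <= r < rows and 0 <= c < cols) or grid[r][c] == "#":
            return False

    # Consecutive cells must be 4-neighbours (no jumps, no diagonal moves).
    for (r1, c1), (r2, c2) in zip(path, path[1:]):
        if abs(r1 - r2) + abs(c1 - c2) != 1:
            return False

    return True


example_grid = [
    "..#",
    ".##",
    "...",
]
example_path = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
print(is_valid_maze_path(example_grid, example_path, (0, 0), (2, 2)))
```

A binary check like this is one way to obtain the kind of clear success signal the benchmark describes. Extracting the trajectory from video frames is a separate and harder step that this sketch does not cover.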

@misc{VMEvalKit,
  author = {VMEvalKit Team},
  title = {VMEvalKit: A framework for evaluating reasoning abilities in foundational video models},
  year = {2025},
  howpublished = {\url{https://github.com/Video-Reason/VMEvalKit}}
}